
How Analog In-Memory Computing is Transforming Transformer Models

Analog in-memory computing is reshaping how transformer models run, promising faster, more energy-efficient, and more scalable AI.

4. 2. 2025

1. Why Transformer Models Need a New Approach

The explosion of artificial intelligence (AI) applications—from natural language processing to image generation—has been fueled by transformer models. Despite their impressive capabilities, these models are computationally intensive and demand vast amounts of energy, highlighting the limitations of traditional digital computing. Enter analog in-memory computing (AIMC): a disruptive approach that promises to accelerate transformer models by reducing data movement and energy consumption while improving performance. This guide explores how AIMC is poised to transform AI hardware.

2. Understanding Transformer Models and Their Challenges

What Are Transformer Models?

Transformer models leverage self-attention mechanisms and deep, layered architectures to capture long-range dependencies in data. They have set new benchmarks in tasks such as language translation, text generation, and image processing, making them indispensable in modern AI applications.

This image illustrates a Transformer-based sequence processing mechanism, particularly focusing on token embeddings and attention relationships.
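To make the self-attention idea concrete, here is a minimal sketch in plain Python. It assumes the standard scaled dot-product formulation and, for brevity, uses the token vectors directly as queries, keys, and values; a real transformer would first apply learned projection matrices, which are omitted here. The function names and the toy `tokens` list are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted sum of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Toy example (assumption): three 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Every output vector mixes information from all tokens, which is how attention captures long-range dependencies. The inner dot products and weighted sums are the matrix operations that dominate the cost of these models.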

Computational and Energy Demands

Transformer models rely heavily on intensive matrix-vector multiplications and large-scale parameter updates. As these models grow—often reaching billions of parameters—the computational requirements become a major bottleneck, resulting in:

High Energy Consumption: Repeated data movement and high-precision calculations drain energy resources.

Latency Issues: Traditional digital processing can introduce delays that affect real-time applications.

Scalability Limits: Increasing model sizes strain existing hardware, slowing down innovation.

3. The Limitations of Conventional Digital Computing

Data Movement Bottlenecks

In digital computing systems, data is constantly transferred between memory and processing units. This repeated movement not only consumes energy but also slows down processing speed, especially in systems running large-scale transformer models.

Scaling Issues and Energy Costs

As the size of AI models increases, so do the demands on digital systems. The traditional von Neumann architecture struggles to keep pace, leading to increased operational costs and energy consumption, which are significant challenges for deploying AI at scale.

Image: another example of signal and noise extraction from analog memory elements for neuromorphic computing.

4. What is Analog In-Memory Computing (AIMC)?

Fundamental Concepts

Analog in-memory computing shifts the paradigm by performing computations directly where the data is stored. Instead of moving data to a central processor, AIMC uses the physical properties of memory devices (such as their conductance), together with the voltages and currents applied to them, to compute in place. This in situ computation reduces the energy and time typically lost in data shuttling.

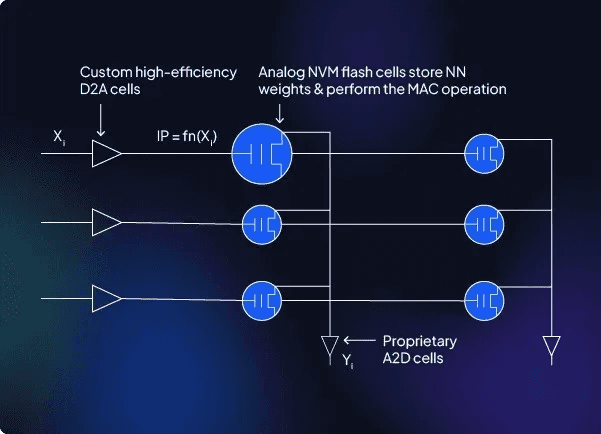

This diagram represents an analog computation framework leveraging an array of ESF3 cells to perform in-memory processing, potentially for neuromorphic computing or efficient analog matrix-vector multiplications.

Key Device Technologies

Several emerging technologies are central to AIMC:

Resistive Random Access Memory (ReRAM): Uses variable resistance levels to perform computations.

Phase-Change Memory (PCM): Relies on changes between amorphous and crystalline states for analog data storage.

Memristive and Ferroelectric Devices: Offer non-volatile storage with adjustable conductance, suitable for analog operations.

These devices provide a foundation for efficient matrix-vector multiplications, a core operation in transformer models.

5. Integrating AIMC with Transformer Architectures

This image presents a neuromorphic-inspired analog in-memory computing (AIMC) architecture optimized for transformer-based computations, particularly focusing on efficient matrix multiplications and activation functions.

Accelerating Matrix-Vector Multiplications

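Matrix-vector multiplication is fundamental to transformer models, and AIMC can execute these operations in parallel across memory arrays. Weights are encoded as conductances in the memory cells, and input activations are applied as voltages to the rows. Each cell then passes a current equal to its conductance times the applied voltage (Ohm's law), and the currents from all cells on a column add up on the shared wire (Kirchhoff's current law). Every column therefore delivers one complete dot product at the same time, which is where the speed advantage over step-by-step digital multiplication comes from.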
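The crossbar principle described above can be simulated in a few lines of Python. This is an idealized sketch, not a model of any particular device: it assumes perfectly linear cells, no wire resistance, and non-negative conductances, and the function name `crossbar_mvm` and the example values are made up for illustration.

```python
def crossbar_mvm(conductances, voltages):
    """Simulate an ideal analog crossbar.

    conductances[i][j] is the conductance (siemens) of the cell at row i,
    column j; voltages[i] is the voltage (volts) applied to row i.
    Returns the current (amperes) collected on each column:
        I_j = sum_i G_ij * V_i
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, row in enumerate(conductances):
        for j in range(n_cols):
            # Ohm's law gives the cell current; Kirchhoff's current law
            # means the currents on a shared column wire simply add.
            currents[j] += row[j] * voltages[i]
    return currents


# Hypothetical 2x2 array: weights stored as microsiemens-scale conductances.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
I = crossbar_mvm(G, V)
```

In hardware the two loops do not exist: all cells conduct simultaneously, so the whole product is available after a single read step. The simulation only reproduces the arithmetic result.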

Energy and Latency Benefits

By reducing the need to move data between separate memory and processing units, AIMC can substantially lower energy consumption and inference latency. This makes it well suited to real-time applications like conversational AI and autonomous systems.

Hybrid Digital-Analog Systems

A promising approach involves combining the high-precision capabilities of digital computing with the efficiency of AIMC. In such hybrid systems:

Training: Remains in the digital domain for numerical accuracy.

Inference: Can be offloaded to AIMC units to capitalize on energy savings and speed.

This synergy—enabled by hardware-software co-design—allows for robust, scalable systems that cater to diverse operational needs.

6. Challenges and Solutions in AIMC

Device Variability and Noise

Analog devices are inherently prone to variability and noise, which can lead to computational errors. Researchers are developing:

Calibration Techniques: To adjust for device imperfections.

Error-Correction Protocols: That mitigate the impact of noise.

Robust Training Algorithms: That accommodate variability without compromising model accuracy.
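One simple way to study the effect of variability is to inject random perturbations into the weights in software and measure how far the result drifts from the ideal one. The sketch below assumes multiplicative Gaussian noise on each stored weight, which is a common simplification rather than a measured device model. The 5% noise level, the helper names, and the toy matrix are all illustrative assumptions.

```python
import math
import random


def mvm(weights, inputs):
    """Ideal matrix-vector product: one output per column of `weights`."""
    n_cols = len(weights[0])
    return [sum(weights[i][j] * inputs[i] for i in range(len(inputs)))
            for j in range(n_cols)]


def add_device_noise(weights, rel_sigma, rng):
    """Scale every weight by (1 + e), with e drawn from N(0, rel_sigma)."""
    return [[w * (1.0 + rng.gauss(0.0, rel_sigma)) for w in row]
            for row in weights]


def relative_error(ideal, noisy):
    """Euclidean distance between the two outputs, relative to the ideal."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(ideal, noisy)))
    norm = math.sqrt(sum(a ** 2 for a in ideal))
    return diff / norm


rng = random.Random(0)  # fixed seed so the experiment is repeatable
weights = [[0.2, 0.8], [0.5, 0.1], [0.9, 0.4]]
inputs = [1.0, 0.5, 0.25]

ideal = mvm(weights, inputs)
noisy = mvm(add_device_noise(weights, 0.05, rng), inputs)
error = relative_error(ideal, noisy)
```

The same perturbation function could be applied during training so that a model learns weights that tolerate the noise. That would be one possible reading of the robust training approach mentioned above, not a description of a specific published method.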

Managing Precision

While digital systems provide high-precision calculations, AIMC operates at lower precision due to its analog nature. Fortunately, many AI models, including transformers, are surprisingly resilient to reduced precision during inference. Advances in quantization-aware training and error-resilient architectures continue to bridge the gap between precision and performance.
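The precision trade-off can be illustrated by rounding weights to a small number of evenly spaced levels and checking the resulting error. This sketch assumes symmetric uniform quantization expressed in bits. Real analog cells offer a limited set of conductance states that need not map cleanly onto a bit width, so the function `quantize_weights` and the example values are only an approximation for illustration.

```python
def quantize_weights(weights, bits):
    """Round each weight to the nearest of 2**(bits-1) - 1 levels per sign."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed representation")
    levels = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)
    step = max_abs / levels  # spacing between representable values
    return [round(w / step) * step for w in weights]


def max_error(weights, bits):
    """Largest absolute difference introduced by quantization."""
    quantized = quantize_weights(weights, bits)
    return max(abs(w - q) for w, q in zip(weights, quantized))


weights = [0.9, -0.4, 0.1, -1.0]
coarse = max_error(weights, 2)  # only -1, 0, +1 (times the step) available
fine = max_error(weights, 8)    # 127 levels per sign
```

The worst-case error is bounded by half the step size, so it shrinks quickly as levels are added. Quantization-aware training exposes the model to this rounding during training so that accuracy holds up at inference time.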

System Integration and Scalability

Integrating AIMC with existing digital infrastructures poses challenges. Future systems must ensure seamless compatibility and scalability, balancing the strengths of both analog and digital domains to maximize efficiency.

7. Future Directions and Research Advances

Breakthroughs in Device Engineering

Recent innovations in memristive devices and other memory technologies have improved endurance, precision, and variability control. These advancements are vital for the reliable operation of AIMC in real-world applications.

System-Level Demonstrations

Prototypes integrating AIMC have demonstrated dramatic improvements in energy efficiency and computation speed for matrix operations—core components of transformer models. These demonstrations pave the way for wider adoption and further optimization.

Commercial Viability and Industry Adoption

For AIMC to transition from research to commercial applications, continued investment in system integration, software support, and standardized programming models is essential. Collaboration among industry, academia, and government will drive the development of robust, scalable hybrid systems that combine the best of both analog and digital computing.

8. The Road Ahead for AIMC and Transformer Models

Analog in-memory computing holds the potential to revolutionize how we process and deploy transformer models. By executing computations directly within memory, AIMC significantly reduces energy consumption and latency, addressing key challenges faced by traditional digital systems. Although hurdles like device variability, limited precision, and system integration remain, ongoing research and innovation are steadily overcoming these barriers. The future is promising for hybrid digital-analog architectures that can bring high-performance, energy-efficient AI to data centers, edge devices, and beyond.

9. Frequently Asked Questions (FAQs)

Q1: What is analog in-memory computing (AIMC)?

A1: AIMC is a computing paradigm that performs calculations directly in the memory where data is stored, reducing energy and latency by minimizing data movement.

Q2: How do transformer models benefit from AIMC?

A2: Transformer models rely heavily on matrix-vector multiplications. AIMC accelerates these operations by executing them in parallel directly within memory arrays, leading to faster and more energy-efficient processing.

Q3: What challenges does AIMC face?

A3: Key challenges include device variability, noise, limited precision, and integration with existing digital infrastructures. Researchers are actively developing solutions to address these issues.

Q4: Can AIMC work alongside traditional digital computing?

A4: Yes, hybrid systems that combine digital precision with the energy efficiency of AIMC are an emerging approach, offering the best of both worlds for training and inference.

Q5: What industries will benefit most from AIMC-enhanced transformer models?

A5: Industries ranging from data centers and cloud computing to edge devices, autonomous systems, and real-time AI applications will benefit from the improved efficiency and performance of AIMC-accelerated transformer models.

Optimize your systems, reduce energy costs, and accelerate AI development by embracing the innovative world of analog in-memory computing.

Written by: Matthew Drabek
